This article was last updated 290 days ago; some of the information in it may have evolved or changed since.

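The association once had an ESXi box running a tiny k8s cluster. It was something of a sacred relic, and it got deleted one day to free up space.

After that I read the docs several times, trying to grasp the concepts first and play second. I ended up lost in the endless links at the bottom of each page, with nothing to show for it but a few hazy terms.

So: skip the concepts, dive straight in, and play until it half makes sense.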

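Prepare some servers

Four Ubuntu 22.04 VMs on ESXi: one as the master, the rest as worker nodes.

Install some software

I picked the minimal install, so a lot of things are missing.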

apt update

apt install open-vm-tools vim ntpdate apt-transport-https ca-certificates curl gpg -y

Disable swap

Leave it on and things break later: by default the kubelet refuses to start while swap is enabled.
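To see what the `sed` expression in this step does before it touches the real `/etc/fstab`, here is a small sketch that runs it against a throwaway file. The sample entries and the `comment_out_swap` helper name are made up for illustration.

```shell
# Sketch: comment out every fstab line that mentions "swap".
# comment_out_swap is a hypothetical helper; it edits the file given as $1.
comment_out_swap() {
    sed -ri 's/.*swap.*/#&/' "$1"
}

# Made-up sample entries standing in for a real /etc/fstab.
sample=$(mktemp)
printf '%s\n' \
    '/dev/sda2 / ext4 defaults 0 1' \
    '/swap.img none swap sw 0 0' > "$sample"

comment_out_swap "$sample"
cat "$sample"
```

The pattern also matches lines that are already commented out, so a second run adds another `#`. That is harmless, but worth knowing.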

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

Hard-code hosts
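A plain `>` redirect to `/etc/hosts` replaces whatever is already in the file, including the localhost entries. One alternative is a helper that appends an entry only when the name is missing. This is a sketch run against a temp file; the `add_host` name and the IPs are placeholders, not values from this setup.

```shell
# Sketch: append "ip name" to a hosts file only if the name is not there yet.
# add_host is a hypothetical helper; the IPs below are placeholders.
add_host() {
    local file=$1 ip=$2 name=$3
    # -F fixed string, -w whole word, -q quiet.
    grep -qwF -- "$name" "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
}

hosts=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts"

add_host "$hosts" 10.0.0.10 k8s-master
add_host "$hosts" 10.0.0.11 k8s-node1
add_host "$hosts" 10.0.0.11 k8s-node1   # second call is a no-op
cat "$hosts"
```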

cat <<EOF >> /etc/hosts
10.0.0.x k8s-master
10.0.0.x k8s-node1
10.0.0.x k8s-node2
10.0.0.x k8s-node3
EOF

Configure forwarding
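Once the commands in this step have run, a quick check that forwarding is really on can look like the sketch below. The `ip_forward_enabled` helper is hypothetical; it reads the kernel's `/proc` entry by default and accepts another path so it can be tried on a plain file.

```shell
# Sketch: report whether IPv4 forwarding is switched on.
# ip_forward_enabled is a hypothetical helper; it reads the kernel's
# /proc entry by default, or the file passed as $1.
ip_forward_enabled() {
    local path=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$path" 2>/dev/null)" = "1" ]
}

if ip_forward_enabled; then
    echo "ip_forward is on"
else
    echo "ip_forward is off"
fi
```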

echo "net.ipv4.ip_forward=1" >> /etc/sysctl.d/k8s.conf

echo "br_netfilter" >> /etc/modules-load.d/k8s.conf

# modules-load.d only applies at boot, so load the module now as well
modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

Install containerd
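The download URLs in this step differ only in version and architecture, so they can be built from a few variables. A minimal sketch: the helper names are hypothetical, and the URL layout is copied from the `wget` commands in this post rather than from any official naming guarantee.

```shell
# Sketch: build the release URLs from version variables so an upgrade
# means changing one line. Helper names are hypothetical.
CONTAINERD_VERSION=1.7.23
RUNC_VERSION=1.1.15
CNI_VERSION=1.6.0
ARCH=amd64

containerd_url() {
    echo "https://github.com/containerd/containerd/releases/download/v${1}/containerd-${1}-linux-${2}.tar.gz"
}
runc_url() {
    echo "https://github.com/opencontainers/runc/releases/download/v${1}/runc.${2}"
}
cni_url() {
    echo "https://github.com/containernetworking/plugins/releases/download/v${1}/cni-plugins-linux-${2}-v${1}.tgz"
}

containerd_url "$CONTAINERD_VERSION" "$ARCH"
runc_url "$RUNC_VERSION" "$ARCH"
cni_url "$CNI_VERSION" "$ARCH"
```

Each printed URL can be handed to `wget` as in the commands that follow.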

wget https://github.com/containerd/containerd/releases/download/v1.7.23/containerd-1.7.23-linux-amd64.tar.gz

wget https://github.com/opencontainers/runc/releases/download/v1.1.15/runc.amd64

wget https://github.com/containernetworking/plugins/releases/download/v1.6.0/cni-plugins-linux-amd64-v1.6.0.tgz

wget https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

tar Cxzvf /usr/local containerd-1.7.23-linux-amd64.tar.gz

install -m 755 runc.amd64 /usr/local/sbin/runc

mkdir -p /opt/cni/bin

tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.0.tgz

mv containerd.service /etc/systemd/system/

systemctl daemon-reload && systemctl enable containerd

Install kube*

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.31/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt update && apt install -y kubelet kubeadm kubectl && apt-mark hold kubelet kubeadm kubectl

Configure containerd

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

# Under [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options], set:

SystemdCgroup = true
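The manual edit can also be scripted. The sketch below assumes the generated config contains the line `SystemdCgroup = false`, which is what I would expect from `containerd config default` on 1.7 but is worth confirming with `grep` first. The `enable_systemd_cgroup` helper and the sample excerpt are made up.

```shell
# Sketch: flip SystemdCgroup from false to true without opening an editor.
# enable_systemd_cgroup is a hypothetical helper that edits the file in $1.
enable_systemd_cgroup() {
    sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$1"
}

# Made-up excerpt standing in for /etc/containerd/config.toml.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  BinaryName = ""
  SystemdCgroup = false
EOF

enable_systemd_cgroup "$cfg"
grep SystemdCgroup "$cfg"
```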

crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

systemctl restart containerd

Shell completion

apt install -y bash-completion

source /usr/share/bash-completion/bash_completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

Initialize Kubernetes

This only needs to be done on the master.

kubeadm config print init-defaults > /etc/kubernetes/init-default.yaml

# Edit the IP fields in init-default.yaml
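Editing the IP field can be scripted too. This sketch assumes the generated file has an `advertiseAddress:` line under `localAPIEndpoint`, and that the file is later handed to `kubeadm init` through `--config`. The `set_advertise_address` helper, the sample excerpt, and the address `10.0.0.10` are all placeholders.

```shell
# Sketch: set advertiseAddress in a kubeadm init config.
# set_advertise_address is a hypothetical helper; 10.0.0.10 is a placeholder.
set_advertise_address() {
    local file=$1 ip=$2
    # Keep the indentation and key, replace only the value.
    sed -i -E "s/^( *advertiseAddress:).*/\1 ${ip}/" "$file"
}

# Made-up excerpt in the shape of `kubeadm config print init-defaults`.
conf=$(mktemp)
cat > "$conf" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
EOF

set_advertise_address "$conf" 10.0.0.10
cat "$conf"
```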

kubeadm init --control-plane-endpoint=[your master ip or dns]

export KUBECONFIG=/etc/kubernetes/admin.conf

You will get output similar to the following. It includes the command for adding nodes; run that on each node to join it to the master.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a Pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join <control-plane-host>:<control-plane-port> --token <token> --discovery-token-ca-cert-hash sha256:<hash>

Install the Pod network add-on: Calico

wget https://docs.projectcalico.org/manifests/calico.yaml

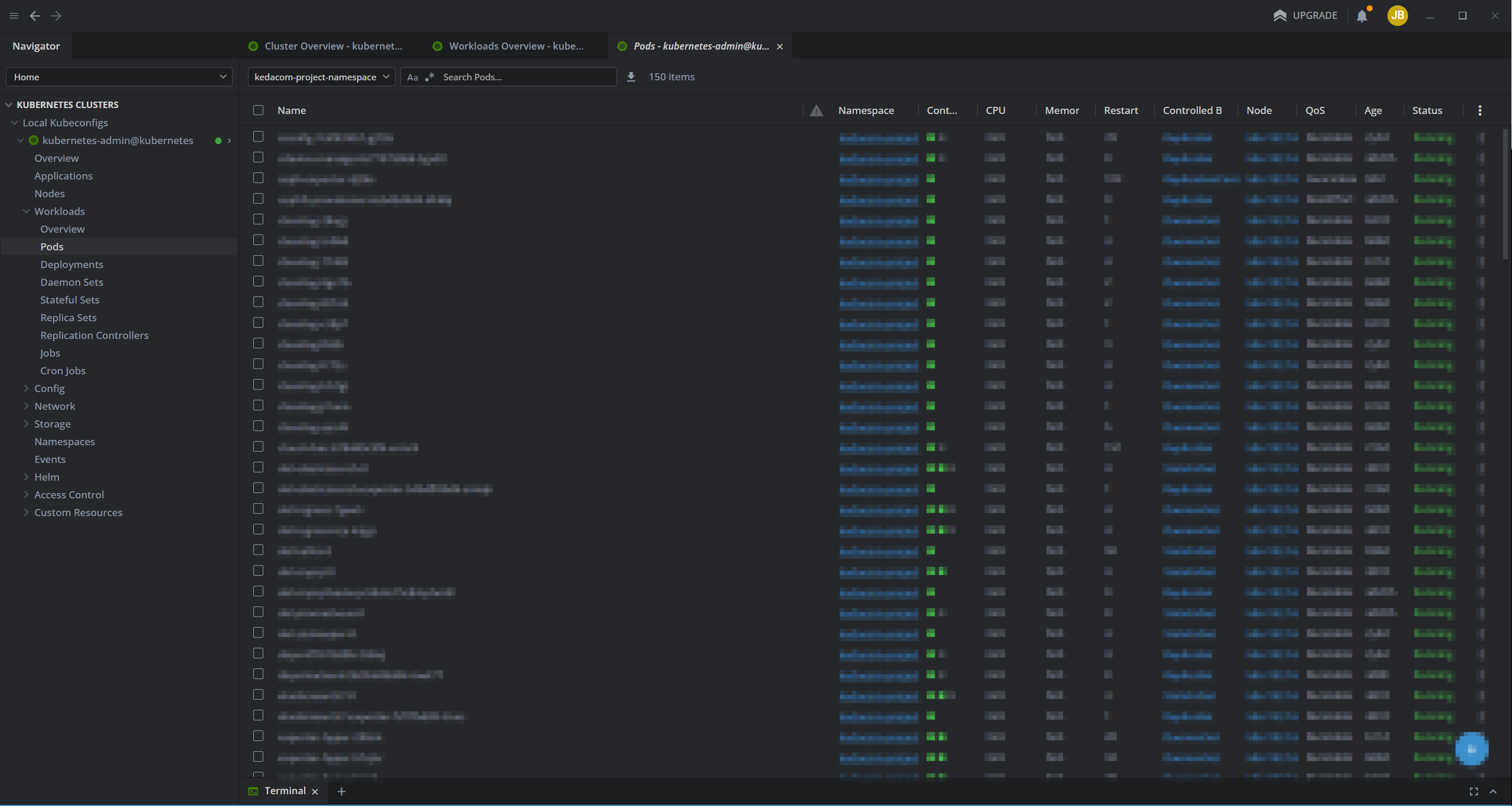

kubectl apply -f calico.yaml

Test with the dashboard

Install kubernetes-dashboard with helm

snap install helm --classic

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

Generate a token with cluster-admin privileges, which kubernetes-dashboard uses to read the cluster state.

# admin.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jbnrz-admin
  namespace: kubernetes-dashboard
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jbnrz-cluster-admin
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: jbnrz-admin
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  name: jbnrz-admin
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: jbnrz-admin
type: kubernetes.io/service-account-token

kubectl apply -f admin.yaml
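Kubernetes stores Secret values base64-encoded under `data`, which is why the token has to go through `base64 -d` before it can be pasted into the dashboard login. A tiny sketch of just that decoding step, using a made-up string rather than a real token:

```shell
# Sketch: Secret values come back base64-encoded, so decode before use.
# The encoded string is a made-up stand-in, not a real token.
encoded='ZXhhbXBsZS10b2tlbg=='
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "$decoded"
```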

kubectl -n kubernetes-dashboard get secrets jbnrz-admin -o jsonpath='{.data.token}' | base64 -d

Forward the port in the foreground

# Forward port 443 of the service to local port 8443

kubectl -n kubernetes-dashboard port-forward services/kubernetes-dashboard-kong-proxy 8443:443 --address 0.0.0.0

Done

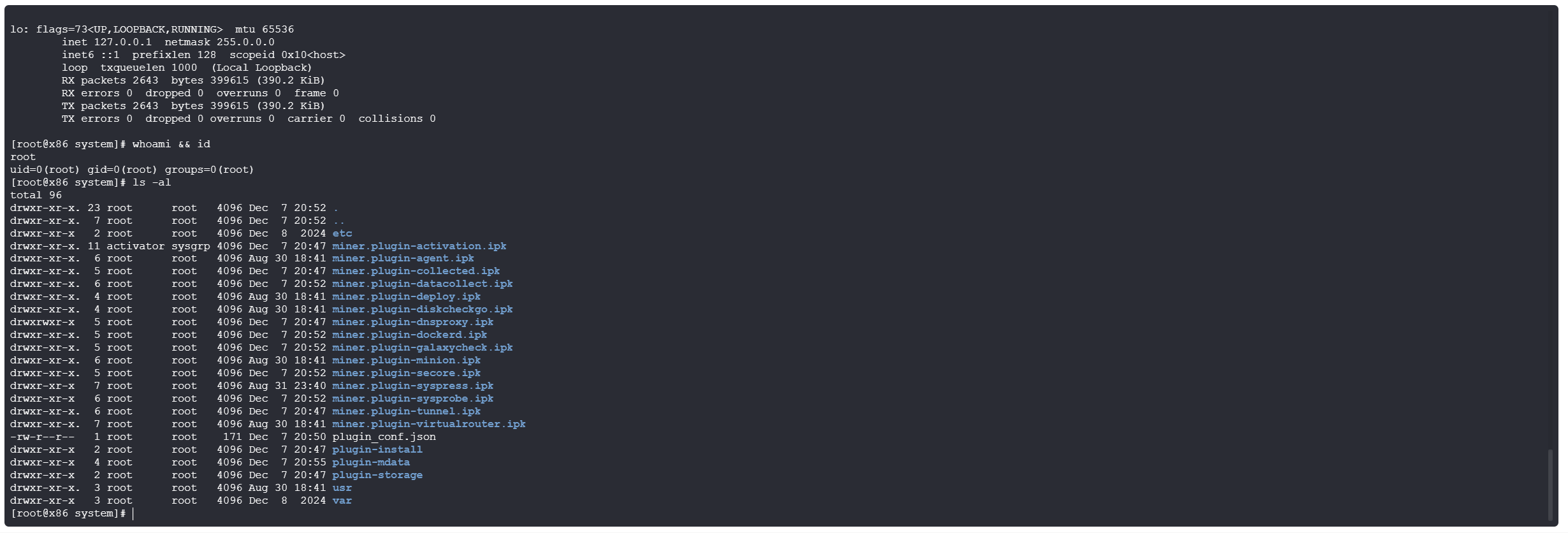

root@k8s-master:~# kubectl get pods -A
NAMESPACE             NAME                                                   READY  STATUS   RESTARTS     AGE
kube-system           calico-kube-controllers-6879d4fcdc-m7br8               1/1    Running  5 (28m ago)  47h
kube-system           calico-node-f45fv                                      1/1    Running  2 (32m ago)  47h
kube-system           calico-node-n5tgk                                      1/1    Running  2 (32m ago)  47h
kube-system           calico-node-pn2f6                                      1/1    Running  3 (32m ago)  47h
kube-system           calico-node-wvlm6                                      1/1    Running  2 (32m ago)  47h
kube-system           coredns-7c65d6cfc9-9w2rz                               1/1    Running  2 (32m ago)  2d
kube-system           coredns-7c65d6cfc9-q9s7n                               1/1    Running  2 (32m ago)  2d
kube-system           etcd-k8s-master                                        1/1    Running  3 (32m ago)  2d
kube-system           kube-apiserver-k8s-master                              1/1    Running  4 (32m ago)  2d
kube-system           kube-controller-manager-k8s-master                     1/1    Running  6 (32m ago)  2d
kube-system           kube-proxy-226gm                                       1/1    Running  2 (32m ago)  2d
kube-system           kube-proxy-97d8w                                       1/1    Running  2 (32m ago)  2d
kube-system           kube-proxy-fbjc7                                       1/1    Running  3 (32m ago)  2d
kube-system           kube-proxy-gxzqw                                       1/1    Running  2 (32m ago)  2d
kube-system           kube-scheduler-k8s-master                              1/1    Running  7 (32m ago)  2d
kubernetes-dashboard  kubernetes-dashboard-api-f95446c4d-bmw4l               1/1    Running  4 (27m ago)  47h
kubernetes-dashboard  kubernetes-dashboard-auth-75f5bfd4b9-p4c7v             1/1    Running  2 (32m ago)  47h
kubernetes-dashboard  kubernetes-dashboard-kong-57d45c4f69-4k592             1/1    Running  3 (27m ago)  47h
kubernetes-dashboard  kubernetes-dashboard-metrics-scraper-5f7678d695-sf5pm  1/1    Running  2 (32m ago)  47h
kubernetes-dashboard  kubernetes-dashboard-web-7787947b64-fcdhr              1/1    Running  2 (32m ago)  47h
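With a listing this long it is easier to filter for the pods that are not healthy. The sketch below reads `kubectl get pods -A` output on stdin and prints anything whose STATUS column is not `Running`. The `not_running` helper and the sample rows are made up, and the column positions assume the default six-column layout shown above.

```shell
# Sketch: list pods whose STATUS column is not "Running".
# not_running is a hypothetical helper reading `kubectl get pods -A`
# output on stdin; NR > 1 skips the header row.
not_running() {
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Made-up sample; on a live cluster: kubectl get pods -A | not_running
printf '%s\n' \
    'NAMESPACE NAME READY STATUS RESTARTS AGE' \
    'kube-system coredns-example 1/1 Running 0 2d' \
    'kube-system calico-node-example 0/1 Init:0/3 0 1m' | not_running
```

Pods in `Completed` state would also show up here, which is usually fine for a quick look.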